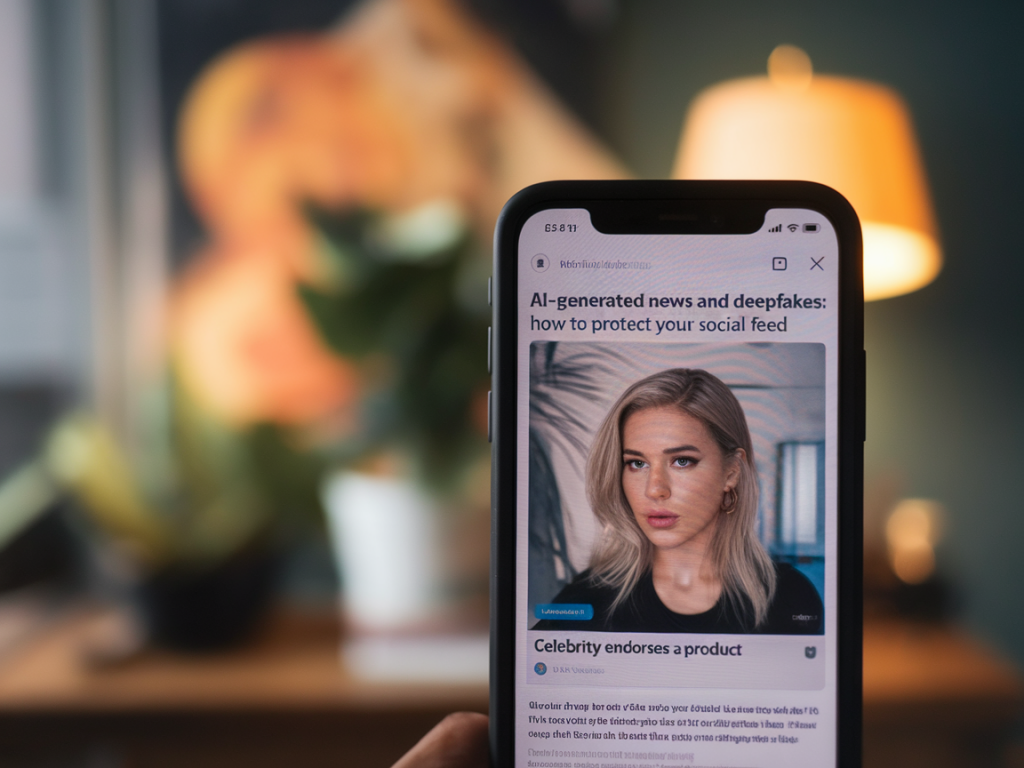

Social feeds are where I start my day and where I see the world change in real time. That’s both a privilege and a responsibility — especially now, when AI can produce convincing news stories, images, and videos that never happened. Over the last few years I’ve learned to treat my feed like a newsroom: skeptical, curious, and methodical. Below I share the practical checks I run whenever something viral lands in my timeline, plus tools and habits that have helped me filter deepfakes and AI-generated news before I share or react.

Why this matters — fast

AI-generated content isn’t just a novelty. It can sway opinion, amplify falsehoods, and make coordinated disinformation more efficient. A well-produced fake video or a polished, fabricated article can look like a breaking scoop. If you re-share that content without checking, you become an unwitting amplifier. I don’t want to be that person, and I don’t want readers of Latestblog Co to be misled — so I’ve built a short, repeatable routine that helps me spot fakes quickly.

First glance checks — the 60-second triage

When something looks noteworthy or outrageous, I give it a quick, 60-second triage. That rapid scan usually tells me whether to dig deeper or move on.
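
For readers who like to script, the domain part of that scan can be roughed out in a few lines of Python. This is a minimal sketch, not a vetted detector: `KNOWN_OUTLETS`, `ODD_TLDS`, the `0.8` similarity threshold, and the helper names are all assumptions made for illustration. It ignores subdomains, and it does not check registration dates, which would need a WHOIS lookup.

```python
from difflib import SequenceMatcher
from urllib.parse import urlparse

# Illustrative lists only; both are assumptions, not vetted data.
KNOWN_OUTLETS = {"bbc.com", "bbc.co.uk", "reuters.com", "apnews.com"}
ODD_TLDS = {"xyz", "top", "click", "buzz"}


def bare_host(url: str) -> str:
    """Return the lowercased host of a URL without a leading 'www.'."""
    host = (urlparse(url).hostname or "").lower()
    return host[4:] if host.startswith("www.") else host


def domain_flags(url: str, threshold: float = 0.8) -> list[str]:
    """Return human-readable warnings for a URL; an empty list means no flags."""
    host = bare_host(url)
    if host in KNOWN_OUTLETS:
        return []
    flags = []
    # A host that is nearly, but not exactly, a known outlet is suspicious.
    for known in sorted(KNOWN_OUTLETS):
        if SequenceMatcher(None, host, known).ratio() >= threshold:
            flags.append(f"looks like {known} but is not")
    # The TLD is whatever follows the last dot in the host.
    tld = host.rsplit(".", 1)[-1]
    if tld in ODD_TLDS:
        flags.append(f"unusual TLD .{tld}")
    return flags
```

Calling `domain_flags("https://reuterz.com/story")` flags the look-alike spelling, while the real `reuters.com` passes cleanly. An empty result only means none of these crude heuristics fired, so the byline and corroboration checks still apply.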

- Check the source. If it’s a known outlet (BBC, Reuters, AP) I still scroll to the byline and the author’s feed. If it’s a new site, I look at the domain carefully: misspellings, odd TLDs, and recent registration are red flags.
- Read beyond the headline. AI can make sensational headlines that aren’t supported by the story. If the article’s body doesn’t back up the claim or relies mainly on anonymous sources without verification, be skeptical.
- Look for corroboration. If real newsworthy events have occurred, multiple reputable outlets will usually report them within minutes or hours. One standalone report with no corroboration is suspicious.

Images and videos: how I verify visual content

Visuals are where deepfakes shine. I always remember that images flatter and videos can be manipulated. Here’s my visual verification playbook.

- Reverse image search. I drag the image into Google Images or use TinEye to see if the photo is recycled from another context. For videos, I check screenshots of key frames.
- Metadata and source files. Photos and videos often have EXIF or other metadata that reveal where and when they were taken. Tools like FotoForensics and Jeffrey’s Exif Viewer can help, though many social platforms strip metadata.
- Look for physical inconsistencies. Shadows that point in different directions, mismatched reflections, strange blinking patterns, or inconsistent lip-syncing in a video are giveaways.
- Frame-by-frame inspection. Tools like InVID (a browser extension) let me break a video into keyframes and run reverse searches on each one. That often reveals earlier versions or the original source.

Language and style clues: what AI tends to get wrong

AI-generated text has improved enormously, but it still leaves telltale fingerprints. When I read a suspicious article, I watch for these signs:

- Repetition and awkward transitions. AI sometimes repeats phrases or jumps between topics without clear logic.
- Overly generic sourcing. Phrases like “experts say” or “sources told us” without names or verifiable institutions can indicate weak or generated reporting.
- Vague details. If the piece includes lots of confident statements but no concrete facts (dates, quotes, place names), I treat it cautiously.
- Too-perfect language. Polished prose that lacks distinct voice or regional nuance can be a hint, though not a proof, of AI drafting.

Tools I use and recommend

I rely on a small toolkit I can access on desktop and mobile. You don’t need to be a tech expert — these tools are designed for everyday users.

- Reverse image search: Google Images, TinEye, DuckDuckGo image search.
- Video verification: InVID/WeVerify, Amnesty’s YouTube DataViewer.
- Metadata: FotoForensics, Exif.tools.
- Browser extensions: NewsGuard (flags questionable news sites), Sensity (deepfake detection), and browser plugins that show site ownership details and content age.
- Fact-checking sites: Snopes, FactCheck.org, PolitiFact, and for international stories, AFP Fact Check and AP Fact Check. I also check X (formerly Twitter) threads from reputable journalists who often surface original sources quickly.

How to protect your feed: practical habits

Beyond verifying individual items, I’ve adopted habits that reduce exposure to misinformation and give me more time to check before reacting.

- Curate who you follow. Follow primary sources (official accounts, verified journalists, local stations) instead of accounts that push sensational takes. I regularly prune accounts that consistently amplify unverified claims.
- Limit algorithmic amplification. On platforms where you can choose a chronological feed, I switch to it when I’m researching a breaking event. That reduces the chance the algorithm will amplify unverified viral posts.
- Turn off auto-play. Videos can play silently and mislead before you have time to inspect them. Auto-play also boosts engagement for misleading content.
- Pause before sharing. I try to wait at least 10 minutes and run through my quick verification steps before sharing anything shocking.

When you can’t verify: what to do

Not everything is easily verifiable in 10 minutes. When I can’t confirm something, I label my response accordingly or don’t share it at all.

- Use cautious language. If I must comment, I write that the claim is unverified and explain what I couldn’t confirm.
- Flag for platforms. Use reporting tools on Facebook, X, TikTok, or Instagram to flag suspicious content. Platforms often take action after multiple reports.
- Ask experts. Post the claim to credible groups (journalists, local reporters, or fact-checking communities) and ask if anyone can verify it. I’ve often found journalists who are already on the case and can provide sources.

A few myths about AI detection

There are false beliefs that can lull people into a dangerous complacency. I want to bust a few:

- Myth: “Watermarks or labels reliably identify AI content.” Platforms are starting to label AI content, but not all creators comply, and labels can be removed or faked.
- Myth: “If it looks human, it’s real.” Deepfakes are increasingly realistic. Always verify the provenance, not just the production quality.
- Myth: “Only state actors use deepfakes.” Anyone with access to consumer-grade AI tools can create convincing fakes. The risk isn’t just sophisticated actors; it’s scale and accessibility.

What platforms and companies are doing, and what I wish they would do

Platforms have improved detection and labeling, and tools from companies like Microsoft, Google, and startups such as Sensity are promising. Still, I want stronger provenance standards: cryptographic provenance (signed origin data) embedded in media files, better cross-platform sharing of takedown information, and faster cooperation with independent fact-checkers. Until then, the responsibility falls partly on each of us to verify before we amplify.
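
To make "signed origin data" concrete, here is a toy sketch of the idea: a publisher attaches a cryptographic tag to a file, and any later change to the bytes makes verification fail. It swaps in a shared-secret HMAC from Python's standard library purely for brevity. Real provenance schemes, such as the C2PA content credentials standard, use public-key signatures so that anyone can verify without holding a secret. The function names, key, and payload below are hypothetical.

```python
import hashlib
import hmac


def sign_media(media: bytes, key: bytes) -> str:
    """Return a hex tag binding the key to the SHA-256 digest of the media."""
    digest = hashlib.sha256(media).digest()
    return hmac.new(key, digest, hashlib.sha256).hexdigest()


def verify_media(media: bytes, tag: str, key: bytes) -> bool:
    """Recompute the tag and compare it to the supplied one in constant time."""
    return hmac.compare_digest(sign_media(media, key), tag)


# Hypothetical key and payload, for illustration only.
key = b"publisher-demo-key"
original = b"raw bytes of the published video"
tag = sign_media(original, key)

print(verify_media(original, tag, key))            # untouched file
print(verify_media(original + b"!", tag, key))     # file altered after signing
```

The first check passes and the second fails, which is the property that matters: a single changed byte breaks the link to the original publisher.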

A quick on-the-go checklist

| Step | What I look for |
| --- | --- |
| Source | Domain authenticity, known outlet, author biography |
| Corroboration | Other reputable outlets or official statements |
| Visual check | Reverse image search, frame inspection, metadata |
| Language | Specificity of facts, names, quotes, stylistic oddities |
| Actions | Wait, report, ask experts, don’t reshare until verified |
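
The metadata item in the visual check comes with the caveat noted earlier: many platforms strip it on upload. One quick way to find out whether a downloaded JPEG still carries EXIF data, before opening a viewer, is to walk the file's segments and look for an APP1 block that begins with `Exif`. The sketch below uses only Python's standard library. `has_exif` is a hypothetical helper name, and it reports only whether EXIF is present, not what it contains.

```python
import struct


def has_exif(jpeg: bytes) -> bool:
    """Return True if a JPEG byte string contains an APP1 segment tagged 'Exif'."""
    if jpeg[:2] != b"\xff\xd8":            # every JPEG starts with the SOI marker
        raise ValueError("not a JPEG file")
    pos = 2
    while pos + 4 <= len(jpeg):
        if jpeg[pos] != 0xFF:              # lost sync with the segment structure
            break
        marker = jpeg[pos + 1]
        if marker == 0xDA:                 # SOS: compressed image data follows
            break
        # The 2-byte big-endian length counts itself plus the payload.
        (length,) = struct.unpack(">H", jpeg[pos + 2:pos + 4])
        payload = jpeg[pos + 4:pos + 2 + length]
        if marker == 0xE1 and payload.startswith(b"Exif\x00\x00"):
            return True
        pos += 2 + length                  # jump to the next segment marker
    return False
```

To try it on a saved image, read the file in binary mode and pass the bytes in, for example `has_exif(open(path, "rb").read())` with `path` pointing at your own download. A `False` result is not evidence of tampering, since stripping metadata is routine. It only means this route to verification is closed for that file.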

These steps have made me more confident on social media without turning me into an unmovable skeptic. The goal isn’t to mistrust everything — it’s to build habits that protect the conversation from being hijacked by slick, harmful fakes. If you make even a few of these checks part of your routine, you’ll reduce the chance of spreading misinformation and help make your feed a little bit more honest.